General Selection and Emergence

Change has an easy problem and a hard problem.

The easy problem is how things get better at what they do. We’ve solved this for biology. Natural selection shows how populations adapt as helpful variations are retained and accumulate over generations.

The hard problem is how novelty arises. How do combined parts create capabilities that none of the parts had alone?

It’s been difficult to study because we can’t see it happening. Processes reorganize internally long before anything shows up on the surface. By the time we notice the new behavior, the transformation has already happened.

The missing piece was always there, hidden by the blindfold. Selection adapts what exists and creates what never existed, operating horizontally and vertically, across meaning and happening.

General Selection drives transformation everywhere: in ecosystems, economies, culture, and minds.

We can now model it as an economic decision. Every process faces a calculable cost-benefit choice between maintaining the current internal state and reorganizing entirely. When the cost of patching exceeds the cost of rebuilding, emergence occurs.

Emergence is the only show in town.

Contents

5.1 Darwin’s Natural Selection

5.1.1 Strengths of Natural Selection

5.1.2 Limitations of Natural Selection

5.1.3 Toward a Broader Framework

5.2 A Theory of General Selection

5.2.1 The Engine of Change

5.3 Emergence Through General Selection

5.3.1 Revisiting Natural Reality

5.3.2 Systemic Evolution

5.3.3 Pathways to Complexity

5.3.4 Beyond Space and Time

5.3.5 Through Orthogonality

5.3.6 Seeing Natural Spaces

5.3.7 Gödel, Darwin, and the Blindfold

5.4 Demystifying Emergence

5.4.1 Working Within the System

5.4.2 Transcending Causal Spaces

5.4.3 The Path to Emergence

5.4.4 Advanced Concepts

5.5 Applications Across Contexts

5.5.1 Evolution of Flight

5.5.2 Antibiotic Resistance

5.5.3 Digital Transformation

5.5.4 Language and Learning

5.5.5 Metacognition

5.5.6 A Unified Understanding

5.6 The Economics of Emergence

5.6.1 Summary

5.6.2 Rules Emerge Through Impedance Application

5.6.3 Affordances

5.6.4 The Stay-or-Go Threshold

5.6.5 Dual-Pathway Resistance Adjustment

5.6.6 Model at a Glance

5.6.7 Operational Cost Functions

5.6.8 Strategic Emergence

5.6.9 Toy Simulation

5.6.10 Universal Emergence Pattern

5.6.11 Distributed Realities as Natural Processes

5.6.12 GameStop Market Transformation

5.6.13 Climate Science Consensus

5.6.14 Estimation Recipe

5.6.15 Threats to Validity

5.6.16 Predictive Power and Applications

5.6.17 Conclusion

5.6.18 Appendix: Sufficient Conditions for Threshold Ordering

5.6.19 Where Disruption Comes From

5.7 Closing Remarks

5.1 Darwin’s Natural Selection

Charles Darwin’s theory of natural selection redefined how we understand evolution. In On the Origin of Species (1859), he showed that populations change as traits that improve survival or reproduction grow more common. Variability introduces traits that persist when they align with environmental demands, while less effective traits disappear.

Natural selection explains adaptation by showing how species adjust to changing conditions. Antibiotic resistance in bacteria demonstrates this: random mutations introduce differences, and selective pressures determine which strains survive and spread. Fossil records support this model, showing how traits accumulate over millions of years, marking transitions between species.

5.1.1 Strengths of Natural Selection

Natural selection provides a clear explanation for adaptation in living systems. It shows how traits develop and persist under environmental pressures, creating a systematic way to understand biological change. From microbial resistance to large-scale evolutionary transitions recorded in fossils, extensive evidence supports its principles.

5.1.2 Limitations of Natural Selection

Despite its significance, natural selection has two key limitations:

- Scope: natural selection deals with biological evolution, but similar selection processes influence other systems like technology, culture, and economies. These contexts display patterns of adaptation and persistence that operate beyond the boundaries of Darwin’s model.

- Emergence: natural selection models gradual change, but it can’t account for emergence, the formation of entirely new forms and behaviors. Evolutionary leaps, like the transition from single-celled to multicellular life or the development of flight, represent transformations that go beyond optimizing existing traits. These changes introduce new ways of existing that Darwin’s framework doesn’t describe.

5.1.3 Toward a Broader Framework

Darwin captured part of the picture. Natural intelligence extends beyond biology, happening wherever patterns persist and remake themselves. Brains and consciousness aren’t required. The capacity is built into how things work.

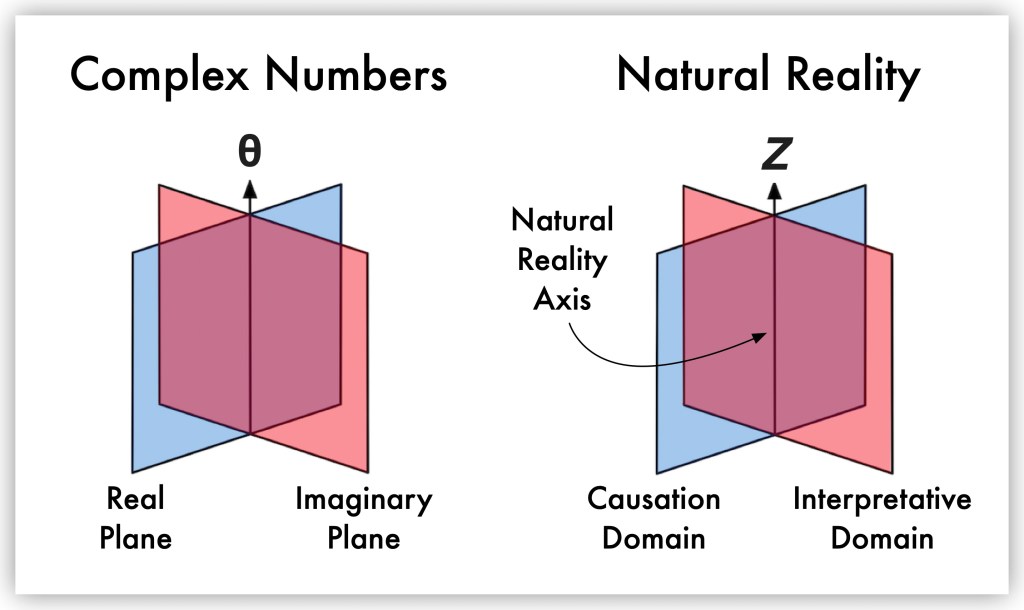

Selection operates in two different realms. In the Causation Domain, processes interact. In the Interpretative Domain, meaning gets assigned and responses get built.

These domains connect through induction, mediated by light, and remain distinct. Selection leads to stability or transformation.

The Natural Reality Axis shows how processes resist the application of the rules governing them. As processes accumulate Incoherence, their impedance changes, leading to either reorganization or the development of new functions. Selection operates in two directions: adapting within current constraints or breaking free to create new possibilities.

5.2 A Theory of General Selection

General Selection explains how processes evolve and transform across all contexts, handling both gradual adaptation and disruptive shifts through the underlying mechanisms of change.

The principles of natural selection apply universally. The same mechanisms that drive persistence and transformation work across biological organisms, technological innovations, and cultural change. New forms and behaviors arise naturally through mechanisms we can understand.

General Selection models evolution as a continuous process within the dual-domain framework of Natural Reality. It bridges the Causation and Interpretative Domains, creating a universal framework for understanding how processes evolve and grow more complex.

Reality evolves incrementally, with changes that look continuous and gradual. Evolution happens through causal mechanisms. Progression in the Interpretative Domain reflects processes adapting through changes in the hidden Causation Domain. Each transformation represents an adjustment in causal impedance, guiding emergence along the Natural Reality axis.

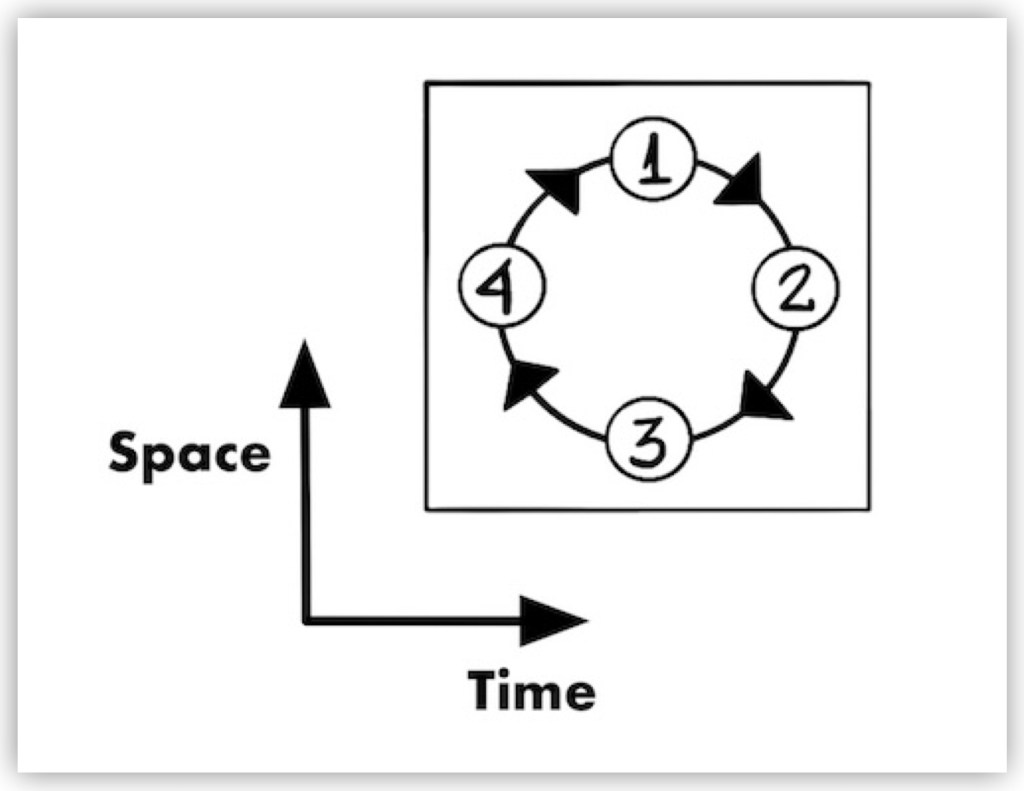

General Selection works in a continuous loop of four steps: interaction, variability, selection, and accumulation.

Each cycle helps processes harmonize with their environment while maintaining potential for both incremental changes and transformative shifts.

(1) Interaction

The loop begins with interaction, where processes engage with their environment or other processes. These interactions create the conditions for change. Whether gradual or transformative, all changes start from these engagements.

(2) Variability

Variability comes from interactions, introducing new possibilities. This phase includes both small adjustments and larger, more disruptive changes. Variability creates the raw material for selection.

(3) Selection

Selection determines which variations persist and which disappear. This mechanism guides both gradual optimizations and transformative changes. Effective variations that improve alignment with constraints get retained, while less effective changes get filtered out.

For gradual changes, selection provides stability by incorporating incremental adaptations. For transformative changes, selection harmonizes new configurations with existing realities, allowing disruptive innovations to establish themselves.

(4) Accumulation

Accumulation layers retained changes over successive cycles. This process drives both evolutionary refinement and transformation. Gradual adaptations build upon one another, leading to steady optimization, while transformative changes introduce new dynamics that redefine the process.

Accumulation drives evolution through incremental improvements and the incorporation of new capabilities. This shows how General Selection handles both continuity and transformation.
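The four steps above can be sketched as a toy loop. This is a minimal illustration and not the author's model: the numeric trait, the `fit` function, the Gaussian perturbation, and every parameter value are assumptions chosen for the example. It covers only the gradual, within-constraints side of the loop.

```python
import random


def general_selection(population, fitness, cycles=50, step=0.1, seed=0):
    """Toy loop: interaction -> variability -> selection -> accumulation."""
    rng = random.Random(seed)
    mean_fit_history = []
    for _ in range(cycles):
        # (1) Interaction: each process meets its environment and gets a fit score.
        scored = [(fitness(p), p) for p in population]
        # (2) Variability: each process proposes one randomly perturbed variant.
        variants = [p + rng.gauss(0.0, step) for _, p in scored]
        # (3) Selection: a variant persists only if it fits better than its parent.
        population = [v if fitness(v) > f else p
                      for (f, p), v in zip(scored, variants)]
        # (4) Accumulation: retained changes are the starting point of the next cycle.
        mean_fit_history.append(sum(fitness(p) for p in population) / len(population))
    return population, mean_fit_history


# Hypothetical environment: fit is highest when the trait equals 3.0.
def fit(trait):
    return -abs(trait - 3.0)


final_population, history = general_selection([0.0] * 20, fit)
print(f"mean fit after first cycle: {history[0]:.3f}, after last: {history[-1]:.3f}")
```

Because selection here only retains improvements, mean fit never decreases from one cycle to the next. Transformative shifts would need a mechanism for changing the fit function itself, which this sketch leaves out.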

5.2.1 The Engine of Change

General Selection operates as a recursive loop where each step (interaction, variability, selection, and accumulation) feeds into the next, creating a continuous cycle. The mechanism supports both gradual change and transformative emergence, helping processes adapt while building complexity.

The loop fine-tunes processes to improve their fit with environmental constraints and opportunities. The same loop introduces disruptive variability, harmonizes it through selection, and integrates it through accumulation. General Selection handles both incremental refinements and major shifts.

5.3 Emergence Through General Selection

General Selection functions in two directions. Horizontal selection adapts processes within existing constraints. Vertical selection breaks those constraints entirely, producing new possibilities.

The vertical dimension connects to the Natural Reality Axis, where processes move orthogonally beyond their current boundaries.

5.3.1 Revisiting Natural Reality

Variability introduced during interactions influences causal impedance, defining how constraints act upon a process.

Under typical conditions, change (δ) operates within the same plane (n) as decay (λ), remaining aligned with the process’s current Natural Space, where its causal impedance (ZΨ) is fixed. When variability introduces Δ, δ turns orthogonal to λ, and ZΨ moves, allowing the process to sidestep prior limitations.

The process builds new causal connections. Emergence follows. Variability adjusts ZΨ, altering the balance between decay and change. As Ψ’s influence weakens, new pathways for transformation open up.

Incoherence (Δ) measures how a process’s relationship to governing rules changes. When Δ > 0, δ moves orthogonally to λ, allowing the process to bypass direct enforcement of Ψ.

Because Δ is proportional to changes in causal impedance (ZΨ), it drives constraint-breaking and novel configurations.

Distinguishing between typical variability and Incoherence shows how processes evolve through adaptive changes and through new behaviors and forms.

5.3.2 Systemic Evolution

General Selection makes emergence part of ongoing evolution. As processes cycle through selection, retained changes reinforce stability while creating potential for transformation through orthogonal causation that introduces novel capabilities.

5.3.3 Pathways to Complexity

Emergence creates new principles of organization that change how processes behave and interact. These principles develop through General Selection, where incoherent changes get integrated into cohesive frameworks.

New alignments build incrementally, improving how processes handle constraints and opportunities. New organizational layers open possibilities that weren’t accessible before. Both pathways come from the interplay of variability, causal impedance, and harmonization.

Natural intelligence exists everywhere, with the capacity to build from difference and harmonize Incoherence, bringing new worlds into being. Minds don’t have exclusive access.

Emergence is the only show in town.

5.3.4 Beyond Space and Time

Emergence follows a four-step loop: (1) interaction, (2) variability, (3) selection, and (4) accumulation. When viewed from above, this loop appears as a flat circle.

Natural Reality adds another dimension:

Viewed at an angle, the loop shows that causation extends perpendicular to the interpretative plane of time and space.

Along the Causation Axis, Incoherence modulates causal impedance, enabling processes to bypass constraints and reorganize. From this perspective, the loop becomes a spiral, where each cycle expands the process into higher levels of complexity (shown as distinct color layers).

What looks like a circle is upward movement.

The orthogonal nature of emergence becomes clear. Because emergence operates within a Causation Domain beyond direct perception, traditional models of evolution only describe selection within a mixed space.

Without recognizing Incoherence as an active mechanism, we see only the outcomes of emergence, not the process that drives it.

The blindfold creates the limitation. From within our Red Spaces, evolution looks linear and gradual because we perceive only the interactions that persist, not the causal phenomena that produce them.

5.3.5 Through Orthogonality

Emergence spirals along the Natural Reality axis. This can be understood mathematically.

Euler’s equation shows how interactions within orthogonal domains enable processes to move across them:

$$e^{i\theta} = \cos\theta + i\sin\theta$$

The same principle works in Natural Reality, where selection operates across the Interpretative Domain and the Causation Domain. Just as in complex numbers, where real and imaginary components interact, the Causation Axis (real) and the Interpretative Axis (imaginary) together form the Natural Reality Axis.

- In mathematics, this axis is represented by θ, the angle in Euler’s equation, which governs the relationship between real and imaginary components.

- In Natural Reality, the Natural Reality axis is the medium through which the impedance function operates, integrating causal and interpretative components to determine how processes evolve across Natural Spaces.

Emergence follows a spiral trajectory rather than a linear progression. Selection drives change within a plane and accumulates Incoherence along the Natural Reality axis, changing the process’s impedance and enabling emergence.
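The spiral picture can be made concrete with a short sketch. It assumes the in-plane position is given by Euler's formula and that height along the axis grows linearly with the angle; `rise_per_cycle` is a hypothetical stand-in for accumulated Incoherence, not a quantity defined in the text.

```python
import cmath
import math


def spiral_point(theta, rise_per_cycle=1.0):
    """Return (in-plane position, height) for loop angle theta in radians.

    The in-plane position is e^{i*theta}. The height is an assumed linear
    accumulation: one full turn of the loop raises it by rise_per_cycle.
    """
    in_plane = cmath.exp(1j * theta)
    height = rise_per_cycle * theta / (2 * math.pi)
    return in_plane, height


start, start_height = spiral_point(0.0)
after_cycle, cycle_height = spiral_point(2 * math.pi)

# Seen from above, the process is back where it began.
print(abs(after_cycle - start) < 1e-9)
# Seen from the side, it has risen by one level.
print(cycle_height - start_height)
```

From above, the two points coincide and the loop looks closed. Only the height coordinate distinguishes one cycle from the next.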

5.3.6 Seeing Natural Spaces

Causation isn’t directly perceived. Imagine transparent boxes stacked along the causal component of the Natural Reality Axis.

From above, as in Figure 20, all Natural Spaces appear flattened into a single plane. In Figure 21, the causal component of the Natural Reality Axis becomes visible, showing that what seemed like a flat loop belongs to a larger reality.

In Figure 24, when viewed from the side, each box appears as a distinct layer, just as different Natural Spaces exist in the Interpretative Domain:

The Causation Axis shown above is the real component of the Natural Reality Axis from Figure 20. It exists within the Causation Domain and measures causal impedance.

Processes move along the Natural Reality Axis, but causal impedance gets defined within the Causation Domain, where vertical selection determines how processes resist or adapt to change.

The yin-yang symbol compresses a continuous process into opposing forces. Our perception flattens an open spiral of emergence into a closed evolutionary loop.

Emergence accumulates Incoherence, introducing new layers of complexity. Each cycle builds upon the last, spiraling upward through successive transformations.

We experience reality through the blindfold. We observe Natural Spaces from within our own minds, which blend every space into a flat Red Space, hiding the Causation Axis that shows how processes change. General Selection works across these layers, selecting for harmonization within and across spaces while enabling emergent complexity along the hidden dimension.

Evolution operates as ascending spirals, where each iteration builds upon previous ones to create new forms of harmonization.

5.3.7 Gödel, Darwin, and the Blindfold

Kurt Gödel discovered something unexpected about formal reasoning. In 1931, he proved that any consistent formal system capable of expressing arithmetic contains statements it can neither prove nor disprove. If the system remains consistent, it will always be incomplete. If it tries to account for everything, it will contradict itself.

Darwin studied how species adapt through natural selection. Gödel studied how logical systems handle completeness. Both found processes that improve themselves within existing constraints. Darwin showed how traits get better through selection. Gödel showed why formal systems can’t be both complete and consistent.

Neither explained how a process breaks out of its constraints entirely.

The connection reveals itself when we recognize incompleteness as a driver rather than a limitation. When a process encounters something Incoherent from its current perspective, something its existing logic cannot resolve, tension accumulates. The process either remains stuck in repetition or reorganizes its internal framework to accommodate what once seemed impossible.

Each cycle of selection encounters new incompleteness. The process either stagnates or adjusts how it interprets reality. When a new perspective successfully accommodates what was previously Incoherent, it persists. The model stretches. The loop returns at a higher level of organization. What appeared as circular repetition reveals itself as an ascending spiral.

Incompleteness drives emergence. The contradictions that formal systems cannot resolve from within become the pressure that forces processes to transcend their current constraints.

5.4 Demystifying Emergence

General Selection operates within causal spaces. For now, treat a causal space as a simplified Natural Space, where selection governs interactions within a defined set of rules.

An approaching train demonstrates different types of interactions: destructive, constructive, and neutral. The example shows how processes work under stable conditions, then extends beyond coherent interactions to the threshold of emergence. Stepping beyond the train’s causal space requires navigating a new framework. Harmonization determines whether a process collapses or transitions into a new form of persistence.

General Selection optimizes within constraints while also determining when a process must move beyond them, defining the pathways of emergence.

5.4.1 Working Within the System

Imagine standing on a train track with a train rapidly approaching. The train’s immense mass and momentum dominate the interaction. Without intervention, the outcome follows a direct course: a collision that brings the process to a halt.

The impending enforcement of Rules of Causation governs interactions within the causal space. A causal space is like a closed environment where specific rules govern how processes interact. These rules dictate how causes propagate and limit what can be achieved without stepping outside the space.

Interactions confined to a single causal space are known as coherent interactions, aligning with the system’s rules to produce predictable outcomes.

Coherent interactions can be categorized into three types:

- Destructive Interactions: Running toward the train accelerates the collision, increasing the cost of the interaction and ending the process sooner.

- Constructive Interactions: Running away from the train reduces the severity of the collision, extending the process’s duration. It improves outcomes but remains bound by the system’s rules.

- Neutral Interactions: Jumping up and down on the tracks produces nearly the same outcome as standing still, with no significant change to the system or process.

Constructive interactions may extend the process’s permanence, but they leave the underlying rules and the system’s resistance to the interaction, known as causal impedance, unchanged. All coherent interactions are idealized, operating within a space where the process’s causal impedance remains constant.
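The three coherent interactions can be worked through as simple arithmetic. This sketch assumes straight-line motion at constant speeds, and the distance and speeds are hypothetical values picked for illustration. The same function, standing in for the unchanged rule, handles every case.

```python
import math


def time_to_collision(gap_m, train_speed, speed_toward_train):
    """Seconds until impact on the track; one rule covers every coherent choice."""
    closing_speed = train_speed + speed_toward_train
    if closing_speed <= 0:
        return math.inf  # only if the runner outpaces the train, unlike the values below
    return gap_m / closing_speed


GAP_M, TRAIN_SPEED, RUN_SPEED = 300.0, 30.0, 5.0  # hypothetical: metres, m/s, m/s

outcomes = {
    "destructive (run toward)": time_to_collision(GAP_M, TRAIN_SPEED, +RUN_SPEED),
    "neutral (jump in place)": time_to_collision(GAP_M, TRAIN_SPEED, 0.0),
    "constructive (run away)": time_to_collision(GAP_M, TRAIN_SPEED, -RUN_SPEED),
}
for label, seconds in outcomes.items():
    print(f"{label}: {seconds:.1f} s")
```

Running away buys time and running toward loses it, but every branch still ends in a collision because the rule itself never changes.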

5.4.2 Transcending Causal Spaces

Incoherent interactions arise when a process steps beyond the limitations of an existing causal space, redefining the relationship between the process and the system.

Imagine stepping off the train tracks entirely. This action removes the train’s influence, eliminating the interaction’s cost. Unlike running faster or standing still, stepping aside creates a new relationship with the system, freeing the process from the train’s trajectory.

By escaping the established causal space, processes can achieve outcomes previously unattainable. However, stepping outside existing rules creates new challenges. Incoherence requires harmonization with its environment to produce sustainable emergence.

5.4.3 The Path to Emergence

Not all incoherent interactions lead to emergence. For emergence to occur, Incoherence must align with the environment in a process known as harmonization.

Consider a train track on a bridge. Stepping off the tracks without a stable platform leads to a fall, ending the process entirely. If a platform exists alongside the tracks, stepping onto it avoids the train’s destructive force while maintaining stability. Here we see Harmonized Incoherence, revolutionary change supported by environmental conditions.

Harmonized Incoherence enables processes to transcend existing constraints, creating new layers of organization and functionality.

In the train example, stepping onto the platform establishes a new causal space, allowing the process to persist and adapt in ways previously impossible.
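The cases in the train example reduce to a small decision table. The function below is a sketch of that table under the simplifying assumption that each choice is described by two yes-or-no facts; the names and return strings are illustrative.

```python
def classify(leaves_causal_space, platform_available):
    """Map a choice in the train example to the outcome the text describes."""
    if not leaves_causal_space:
        # Running, standing, or jumping on the tracks: still bound by the train.
        return "coherent: outcome still set by the existing rules"
    if platform_available:
        # Stepping onto a platform: Incoherence that the environment supports.
        return "harmonized Incoherence: new causal space, process persists"
    # Stepping off a bridge with nothing alongside it.
    return "unharmonized Incoherence: process ends"


for choice in [(False, True), (True, True), (True, False)]:
    print(choice, "->", classify(*choice))
```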

5.4.4 Advanced Concepts

Emergence happens through a sequence of interactions, often beginning with smaller, less impactful events that prepare systems for major disruptions.

Imagine rolling carts on a train track instead of an oncoming train.

These smaller interactions provide opportunities to explore behaviors that build evolutionary variability, equipping processes to adapt during major disruptions.

When a significant event like the train arrives, harmonized Incoherent behaviors, like stepping onto a stable platform, enable processes to bypass the impact and persist.

Incoherent changes that lack harmonization, like stepping into a void, don’t lead to emergence.

The creation of a new causal space marks a pivotal moment in this process, but it’s only the beginning. When multiple processes enter a new causal space, their interactions define its stability and potential for growth.

Imagine several people stepping onto the platform alongside the train tracks. No longer constrained by the train’s trajectory, they begin to interact with one another, playing by new rules. Together, they form a small system within the new causal space, establishing relationships and patterns unique to their shared environment.

The persistence of this new causal space depends on its ability to remain harmonized with the broader environment. If the participants’ interactions align with external conditions, or if new rules develop that foster coexistence, the causal space can sustain itself and thrive. The shared environment develops its own internal structure, adapting to external influences while maintaining harmonization.

Those on the platform, for example, might establish systems of coordination, share resources, or create rules that promote balance both within the group and with the surrounding systems.

This harmonized persistence enables the new space to bridge to other causal spaces, creating opportunities for larger-scale emergence and systemic transformation.

The distinction between evolutionary and revolutionary change arises from these dynamics. Evolutionary changes occur incrementally, refining existing processes through adjustments that optimize outcomes without altering the underlying rules.

For instance, running faster on the tracks is an evolutionary change. It improves outcomes within the existing framework but leaves the framework itself unchanged.

Revolutionary changes, by contrast, redefine the relationship between the process and the system.

Stepping off the tracks is a revolutionary act, creating a new causal space with new possibilities. Such changes succeed only when harmonized with their surroundings, enabling them to persist and grow.

Evolutionary refinements and revolutionary shifts drive emergence. These changes show how systems navigate periods of stability and transformation, adapting to challenges and opportunities.

In the Blue Space, interactions between processes follow rules we cannot see directly. Yet these rules underlie the dynamics of stability, transformation, and the creation of new possibilities.

5.5 Applications Across Contexts

General Selection happens everywhere. It governs how things adapt and how new things appear. It works the same way in biology, economies, technology, culture, and cognition. The process moves through interaction, variability, selection, and accumulation, shaping both small adjustments and major transformations.

5.5.1 Evolution of Flight

Birds didn’t grow wings overnight. Feathers first developed for insulation or display, but they introduced variability that made new behaviors possible. Some feathered ancestors of birds used them for gliding or balance, increasing causal impedance to environmental challenges like predators. As these changes harmonized with stronger muscles and lighter bones through repeated interactions, they accumulated into the ability to fly. What started as a small change turned into a new way of existing.

5.5.2 Antibiotic Resistance

Bacteria evolve quickly because mutations introduce variability constantly. Some mutations increase causal impedance to antibiotics, allowing bacteria to survive treatment. The widespread use of antibiotics strengthens selection, ensuring that only resistant strains persist. As these strains multiply and interact with different environmental conditions, resistance accumulates, creating bacterial populations that medicine can no longer treat effectively. What was once an isolated mutation turns into the dominant form.
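How quickly an isolated mutation can become the dominant form is easy to see with a little arithmetic. The sketch below assumes a fixed survival rate per generation for each strain under treatment. The rates and the starting fraction are hypothetical, not measured values.

```python
def next_resistant_fraction(p, survive_resistant=0.9, survive_susceptible=0.1):
    """One generation of treatment: reweight the population by who survives."""
    resistant = p * survive_resistant
    susceptible = (1.0 - p) * survive_susceptible
    return resistant / (resistant + susceptible)


def generations_until_majority(p0, max_generations=10_000, **rates):
    """Count generations until resistant cells exceed half the population.

    Returns None if that does not happen within max_generations.
    """
    p = p0
    for generation in range(1, max_generations + 1):
        p = next_resistant_fraction(p, **rates)
        if p > 0.5:
            return generation
    return None


# Hypothetical start: one resistant cell per thousand.
print(generations_until_majority(0.001))
```

With these assumed rates, the odds of a cell being resistant multiply by nine each generation, so a strain that starts at one in a thousand passes the halfway mark within a handful of generations.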

5.5.3 Digital Transformation

The move from physical to digital reshaped entire industries. Online marketplaces reduced the need for physical retail space. Deep learning displaced many hand-built algorithms in tasks such as image and speech recognition. Streaming platforms largely replaced CDs and DVDs. Each breakthrough forced existing systems to adapt or fade into irrelevance. What began as isolated innovations accumulated into the digital economy we know today.

5.5.4 Language and Learning

Language evolves as new words, slang, and grammar change communication patterns. If a word makes speech clearer, it gains ground against outdated forms. Similarly, learning any skill requires testing different approaches. The methods that work best join the learner’s repertoire. Both processes follow the same pattern: small experiments accumulate into major transformations.

5.5.5 Metacognition

Thinking about thinking operates as its own evolutionary process. People test different ways of solving problems, managing emotions, or making decisions. Effective strategies persist and shape how we reflect, adapt, and improve our thinking. The mind serves as its own laboratory for change.

5.5.6 A Unified Understanding

The same capacity operates in bacteria developing resistance and humans learning new skills. Processes sense their environment, try new approaches, keep what works, and build on previous changes.

Small differences either harmonize with what came before or break away into something new. Whether in biology, economies, technology, culture, or cognition, selection determines what lasts and what disappears. Processes persist when they can adapt, and they transform when Incoherence pushes them past their limits. All evolution, whether physical, social, or mental, works this way.

The examples above show emergence happening everywhere, but they don’t explain when it happens. Why does one bacterial strain develop resistance while another doesn’t? Why does one industry transform while another stagnates? What determines the timing?

Every process reaches a threshold: maintain the current state or reorganize entirely. This threshold is calculable.

The sections that follow formalize when reorganization occurs. Internal reorganization produces new behavior: variability that enters the Blue Space as Incoherence. When this reorganization-driven variability proves disruptive, it triggers selection across populations. Economic emergence feeds General Selection: the threshold model shows when processes reorganize, and that reorganization produces the Incoherence that drives population-level transformation.

We model emergence as an economic threshold where the cost of patching exceeds the cost of rebuilding. The mathematics are detailed because they need to be. This framework turns emergence from mystery into prediction. If the formalism feels dense, focus on the examples: GameStop’s market transformation and climate science consensus formation show the model working in real contexts.

What follows is the first economic model of emergence.

5.6 The Economics of Emergence

Natural processes reorganize when maintaining their current organizational state costs more than rebuilding. Consider the child process that abandons equality rules for equity rules, the market processes that move from institutional control to retail coordination, or the scientific processes that adopt new paradigms. These reorganizations happen when the economics tip, following the path of least resistance as naturally as water flows downhill. External disruptions create conditions that change the economics driving internal reorganization.

Natural emergence operates orthogonally: external disruptions influence the cost structure of internal calculations, while processes follow optimization dynamics toward lower-cost configurations.

Section 5.6.19 demonstrates both mechanisms operating together in one example. The formalism that follows makes that demonstration calculable.

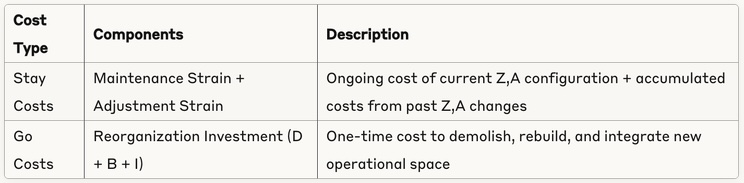

5.6.1 Summary

In the sections below, we model emergence as reorganization that occurs when rebuilding costs less than maintaining. A process’s internal state is summarized by two resistance parameters: impedance Z (resistance to applying its own rules) and attachment A (commitment to expectations). External disruptions only matter insofar as the process’s affordances make them legible, producing an effective gap g. While the process remains in its current organization it performs local adjustments, following whichever pathway (changing Z or A) minimizes adjustment cost. The total stay cost equals ongoing maintenance strain m(Z,A) plus accumulated adjustment strain from past changes. Emergence occurs when total stay cost exceeds reorganization cost R. We give practical definitions for all terms and show calibration walkthroughs in two domains (financial coordination; knowledge consensus). A toy simulation illustrates the threshold crossing and yields testable signatures for prospective prediction. The framework turns emergence timing into an econometric threshold problem with clear measurement, estimation, and intervention levers.
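The threshold described in this summary can be sketched in a few lines. Everything beyond the summary's own terms is an assumption made for illustration: the linear form of `maintenance`, the pathway cost coefficients `k_z` and `k_a`, the rule that absorbing a gap raises the chosen parameter by that gap, and all numeric values. The operational definitions given later in the chapter take precedence over this sketch.

```python
from dataclasses import dataclass


@dataclass
class Process:
    Z: float = 1.0                  # impedance: resistance to applying its own rules
    A: float = 1.0                  # attachment: commitment to expectations
    adjustment_strain: float = 0.0  # accumulated cost of past local adjustments


def maintenance(Z, A):
    """Ongoing strain m(Z, A); this linear form is a placeholder assumption."""
    return 0.5 * Z + 0.5 * A


def adjust(proc, gap, k_z=1.0, k_a=1.5):
    """Absorb an effective gap g through whichever pathway is cheaper right now."""
    cost_z = k_z * gap * proc.Z  # hypothetical cost of changing Z
    cost_a = k_a * gap * proc.A  # hypothetical cost of changing A
    if cost_z <= cost_a:
        proc.Z += gap
        proc.adjustment_strain += cost_z
    else:
        proc.A += gap
        proc.adjustment_strain += cost_a


def stay_cost(proc):
    """Total stay cost: maintenance strain plus accumulated adjustment strain."""
    return maintenance(proc.Z, proc.A) + proc.adjustment_strain


def first_crossing(proc, gaps, R):
    """Return the first step at which stay cost exceeds R, or None if it never does."""
    for t, gap in enumerate(gaps, start=1):
        adjust(proc, gap)
        if stay_cost(proc) > R:
            return t
    return None


print(first_crossing(Process(), gaps=[0.2] * 50, R=3.0))
```

In this toy version, a lower reorganization cost R or larger effective gaps bring the crossing forward, matching the claim that emergence timing is a threshold question.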

5.6.2 Rules Emerge Through Impedance Application

Before examining choices, we must understand how rules operate in natural processes. A rule is whatever takes a cause to an effect, the mechanism that transforms input states into output states. Rules are dynamic processes that generate different outcomes based on how they are applied.

Each process contains its own rules and applies them with varying degrees of resistance called impedance. Impedance determines what emerges from rule application. When a process applies its own rule with impedance Z = 1.0, the rule produces its standard effect. When Z < 1.0, the process offers less resistance and the rule’s effect amplifies. When Z > 1.0, the process offers more resistance and the rule’s effect dampens. This impedance level determines what emerges from the rule’s operation.

This impedance-mediated application operates continuously. The same rule applied with different impedance levels generates different outcomes. A process grows more or less subject to its own rule based on its impedance level. Higher impedance means more immunity to its own rule application. Lower impedance means the process grows more subject to its own rule application. This creates what looks like “different rules” while remaining the same underlying mechanism operating with different resistance characteristics. This principle underlies Natural Reality: rules emerge through their impedance-modulated application.

For example, a fairness rule applied with very low impedance (Z = 0.2) might generate “all mine!” allocation as the process offers almost no resistance to its immediate self-interest, while the same fairness rule applied with standard impedance (Z = 1.0) might generate automatic 50-50 splitting, and with higher impedance (Z = 1.8) might generate context-dependent equity allocation as the process dampens its immediate impulses through greater resistance. The rule itself is the mechanism that takes input (contributions, context) to output (allocation decision), but impedance determines how much the process resists its immediate response, allowing more sophisticated outcomes to emerge.

The principle directly connects to orthogonality and selection. The orthogonal relationship between external disruptions and internal reorganization decisions exists precisely because rule application depends on internal impedance rather than external force. External signals can only influence rule emergence through the process’s own impedance-modulated interpretation and application mechanisms. General selection determines what emerges by favoring impedance configurations that generate sustainable rule applications under current conditions. Impedance levels that produce viable outcomes persist, while those that generate unsustainable outcomes face pressure to reorganize.

The optimization challenge follows. Since different impedance levels generate different outcomes from the same underlying rule, processes continuously face the question of whether to maintain current impedance configurations or invest in reorganization to establish new impedance configurations that might generate more viable rule applications. This creates the core threshold that determines when strategic emergence occurs.

5.6.3 Affordances

External disruptions operate in a different dimension from internal reorganization decisions. Like frequency and time, the two affect each other but remain independent axes. Disruptions create signals in the environment, but these signals must first be interpreted by individual processes before becoming part of their internal calculations. Some processes won’t even perceive certain disruptions because they lack the affordances to pick up and interpret particular environmental signals in useful ways.

The orthogonal relationship derives from Natural Reality, where processes operate independently with their own internal states while interacting through a shared external causal domain. Affordances represent the capacity to detect and meaningfully process specific types of environmental signals in this shared domain. Without appropriate affordances, external signals remain invisible or meaningless to the process, creating no internal pressure for reorganization.

The sequence operates as follows: External disruptions create uninterpreted signals in the environment. The process’s affordances determine which signals can be detected and processed. Those detected signals get interpreted through the process’s existing frameworks. Finally, the interpreted signals trigger internal calculations that may lead to strategic reorganization decisions.

Identical external disruptions produce different responses across processes. Each process has different affordances for interpreting disruptions and makes its own calculation about reorganization. Affordances map disruptions into effective gaps, and better targeting also reduces the variance (and often the level) of adjustment costs.
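The mapping from a shared disruption to process-specific gaps can be sketched in Python. This is a minimal illustration, not part of the model: the channel names, the two example processes, and the choice to encode an affordance as a sensitivity between 0 and 1 are all assumptions made for the example.

```python
def effective_gap(disruption, affordances):
    """Map raw disruption magnitudes to the gap a process registers.

    disruption:  channel name -> raw signal magnitude in the shared environment
    affordances: channel name -> sensitivity in [0, 1]; a missing channel
                 means the process cannot perceive that signal at all
    (Both encodings are illustrative assumptions, not part of the model.)
    """
    return sum(magnitude * affordances.get(channel, 0.0)
               for channel, magnitude in disruption.items())


# One disruption, two hypothetical processes with different affordances.
disruption = {"social_media": 0.6, "price": 0.2}
hedge_fund = {"social_media": 0.5, "price": 1.0}
pension_fund = {"price": 1.0}  # no affordance for social media signals

gap_hedge = effective_gap(disruption, hedge_fund)
gap_pension = effective_gap(disruption, pension_fund)
print(f"hedge fund gap:   {gap_hedge:.2f}")
print(f"pension fund gap: {gap_pension:.2f}")
```

The same disruption yields a larger effective gap for the process that can read the social media channel, and a process with no relevant affordances registers no gap at all.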

5.6.4 The Stay-or-Go Threshold

Every natural process operates by enforcing rules from the inside while maintaining expectations about outcomes. These processes naturally acquire specific causal impedance levels (resistance to applying their own rules) and attachment levels (investment in expectations) through their experience. Maintaining these configurations comes with costs, and changing them also incurs costs. The balance between these costs determines when reorganization occurs.

Processes continuously respond to the balance between current maintenance cost and reorganization cost. They reorganize when the economics tip, following the path of least resistance toward lower-cost configurations.

When a process stays in its current state, it faces ongoing maintenance costs from the energy required to maintain current impedance and attachment levels, plus adjustment costs from the energy required to modify impedance or attachment when gaps arise between interpreted signals and expectations.

When a process reorganizes and abandons its current state, it faces reorganization costs from the investment required to demolish current structure, build new capabilities, and integrate them into a functional whole.

The total cost of staying (maintenance plus accumulated adjustments) determines when reorganization occurs. When staying costs more than reorganizing, the process follows the lower-cost path.

5.6.5 Dual-Pathway Resistance Adjustment

When processes stay in their current configuration, they still face gaps between interpreted signals and expectations that require local adjustments. This creates dual-pathway optimization where the process follows whichever method costs less to close gaps.

The first pathway involves impedance modification, which modifies the process’s resistance to applying its own rules. Higher impedance means the process dampens its rule application through greater resistance, while lower impedance means the process amplifies its rule application through less resistance. Each impedance change creates Incoherence as I = |ΔZ|, the same measure introduced in Chapter 4, where ΔZ = Z_Ψ(n+1) – Z_Ψ(n).

Chapter 4 showed that Incoherence drives orthogonal transformation. Here we see one of its sources: when processes modify impedance, they produce Incoherence. Variability from interactions and mutations produces it. Internal reorganization produces it. The process acts differently in the Blue Space, creating variability that feeds back into the General Selection loop. Small impedance adjustments produce small variability. Reorganization that crosses the economic threshold produces large impedance changes, generating the Incoherence that enables emergence into new Natural Spaces and triggers selection across populations.

The second pathway involves attachment modification, which modifies how strongly expectations influence operations. Higher attachment means strong expectation influence and resistance to change, while lower attachment means weak expectation influence and easy modification.

At each gap, processes follow whichever pathway costs less energy, creating sophisticated resistance adjustment patterns that accumulate strain over time until reorganization occurs.

5.6.6 Model at a Glance

Since rules emerge through impedance-modulated application, we model process state with impedance Z and attachment A as the variables determining characteristics. Having established the conceptual framework, we now formalize the cost structure and threshold mechanics.

Our model uses impedance Z_t and attachment A_t as state variables, with interpreted signal s_t, expectation e_t, and gap g_t = |s_t – e_t| as inputs. Maintenance cost follows M_t = m(Z_t, A_t; ξ), while adjustment costs follow C^Z_t = |ΔZ_t| · d_Z(Z_t) · κ for impedance changes and C^A_t = |ΔA_t| · d_A(A_t) · ρ for attachment changes. Reorganization cost equals R = D + B + I, representing demolition, building, and integration costs respectively. Here I denotes integration cost, not the Incoherence measure I = |ΔZ| from Section 5.6.5.

Core Decision Rule:

if S_t > R:

initiate_strategic_emergence()

where S_t = M_t + ΣC_i

The emergence probability follows the soft version π_t = σ((M_t + ΣC_i) – R) where σ(x) = (1 + e^(-x))^(-1) is the sigmoid function.
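The hard rule and its soft version can be sketched in Python as follows. The function names are hypothetical, and the numbers in the usage lines are placeholders rather than calibrated values. The sigmoid is evaluated in a numerically stable form, which is an implementation detail and not something the model prescribes.

```python
import math


def stay_cost(maintenance, adjustment_costs):
    """S_t = M_t + sum of adjustment costs incurred so far."""
    return maintenance + sum(adjustment_costs)


def should_reorganize(s_t, r):
    """Hard decision rule: reorganize once staying costs more than rebuilding."""
    return s_t > r


def emergence_probability(s_t, r):
    """Soft rule pi_t = sigma(S_t - R), with sigma(x) = 1 / (1 + exp(-x))."""
    x = s_t - r
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # avoids overflow when S_t is far below R
    return z / (1.0 + z)


# Placeholder numbers, chosen only to exercise both branches.
s_early = stay_cost(4.0, [2.0])
s_late = stay_cost(1.0, [10.0, 12.0, 9.0])
print(should_reorganize(s_early, 25.0), round(emergence_probability(s_early, 25.0), 6))
print(should_reorganize(s_late, 25.0), round(emergence_probability(s_late, 25.0), 6))
```

Because the sigmoid is monotone, the soft rule ranks situations exactly as the hard rule does. It only replaces the sharp threshold with a probability that passes through 0.5 at S_t = R.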

All state and proxy variables are min–max normalized within domain and time window.

It is important to note that ‘t’ here does not represent time. The model does not require a clock or continuous flow of moments. Instead, t indexes the ordered sequence of adjustments a process makes in response to disruptions. What matters economically is the accumulation of strain across iterations. Whether we count in seconds, cycles, or events, the tipping point occurs when the cumulative cost of staying exceeds the one-time cost of reorganizing.

5.6.7 Operational Cost Functions

The mathematical framework requires specific functional forms for each cost component to make the framework practically useful. We design these functions to capture realistic trade-offs while remaining mathematically tractable.

Maintenance strain follows a well-behaved pattern that is both increasing and convex:

M_t = m(Z_t, A_t; ξ) = α·Z_t² + β·A_t²·(1 + disruption_intensity·affordance_sensitivity)

This captures the intuition that extreme impedance or attachment configurations become increasingly expensive to maintain, especially under environmental disruption.

Adjustment costs follow a monotone pattern where changes become harder when starting from extreme values:

C^Z_t = |ΔZ_t|·d_Z(Z_t)·κ

C^A_t = |ΔA_t|·d_A(A_t)·ρ

where:

d_Z(Z_t) = γ_Z(1 + Z_t) (increasing change difficulty)

d_A(A_t) = γ_A(1 + A_t) (increasing change difficulty)

Reorganization cost equals R = D + B + I where D represents demolition cost, B represents building cost, and I represents integration cost. Total “stay” cost equals S_t = M_t + ΣC_i.
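As a minimal sketch, the cost functions above translate directly into Python. The class and function names are hypothetical, and the parameter values in the usage lines are placeholders chosen only to make the arithmetic easy to follow.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CostParams:
    alpha: float    # weight on Z^2 in maintenance strain
    beta: float     # weight on A^2 in maintenance strain
    gamma_z: float  # baseline difficulty of changing impedance
    gamma_a: float  # baseline difficulty of changing attachment
    kappa: float    # scale factor for impedance adjustments
    rho: float      # scale factor for attachment adjustments


def maintenance(z, a, p, disruption_intensity=0.0, affordance_sensitivity=0.0):
    """M_t = alpha*Z^2 + beta*A^2*(1 + disruption_intensity*affordance_sensitivity)."""
    return p.alpha * z**2 + p.beta * a**2 * (
        1.0 + disruption_intensity * affordance_sensitivity)


def impedance_cost(delta_z, z, p):
    """C^Z_t = |dZ| * gamma_Z * (1 + Z) * kappa."""
    return abs(delta_z) * p.gamma_z * (1.0 + z) * p.kappa


def attachment_cost(delta_a, a, p):
    """C^A_t = |dA| * gamma_A * (1 + A) * rho."""
    return abs(delta_a) * p.gamma_a * (1.0 + a) * p.rho


def cheaper_pathway(delta_z, delta_a, z, a, p):
    """Return the label and cost of the lower-cost adjustment pathway."""
    cz = impedance_cost(delta_z, z, p)
    ca = attachment_cost(delta_a, a, p)
    return ("Z", cz) if cz <= ca else ("A", ca)


# Placeholder parameters, not calibrated to any domain.
p = CostParams(alpha=0.6, beta=0.8, gamma_z=1.0, gamma_a=1.0, kappa=1.0, rho=1.0)
m0 = maintenance(1.0, 0.8, p)
pathway, cost = cheaper_pathway(0.1, 0.1, 1.0, 0.8, p)
print(f"M = {m0:.3f}, cheaper pathway = {pathway}, cost = {cost:.3f}")
```

With equal scale factors, the pathway whose state variable is currently lower is the cheaper one, because change difficulty grows with the current value of Z or A.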

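If m is convex in (Z,A), then for any given level of accumulated adjustment strain ΣC_i the “stay” region {(Z,A): M_t + ΣC_i ≤ R} is a sublevel set of a convex function and is therefore convex. Because d_Z and d_A are positive, every adjustment cost is nonnegative and ΣC_i never decreases, so the “stay” region shrinks monotonically as gaps persist.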

5.6.8 Strategic Emergence

Emergence follows directly from the cost comparison: when S_t > R, reorganization occurs. Strategic emergence involves abandonment of current state where processes suspend normal resistance constraints, explore alternative configurations, and assemble new capabilities.

Understanding why emergence is hard requires recognizing that processes can’t simply throw out rules and start fresh because reorganization has real costs. The reorganization payoff comes from post-emergence reduction of maintenance strain; we do not assume performance gains elsewhere. The accumulated strain from maintaining and adjusting current impedance/attachment makes the current state increasingly expensive, but reorganization also requires significant investment. Emergence occurs when the economics finally favor reorganization.

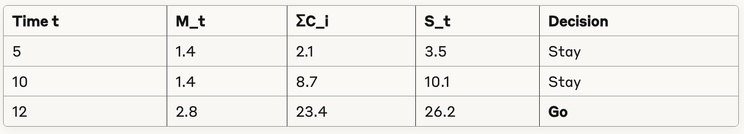

5.6.9 Toy Simulation

A toy simulation helps illustrate the core mechanism in its simplest possible form. This minimal example serves as a calibration template showing how the theoretical framework translates into concrete calculations.

We initialize with Z_0 = 1.0, A_0 = 0.8 and set m(Z,A) = 0.6Z² + 0.8A². Gaps arrive with a step-increase at t* = 10, where g_t = 0.1 for t < 10 and g_t = 0.5 for t ≥ 10. At each step we compute C^Z_t to reduce Z by δ_Z ∝ g_t, or C^A_t similarly, then pick the cheaper option. We set reorganization cost R = 25.0.

What drives the tipping point can be found in this progression. The threshold crossing at t = 12 occurs because the step-increase in gaps forces rapid impedance adjustments that accumulate expensive adjustment strain. Even though maintenance strain M_t increases only modestly, the accumulated adjustment costs ΣC_i shoot up dramatically as the process struggles to close large gaps through local resistance modifications. When total stay cost S_t = M_t + ΣC_i finally exceeds reorganization cost R = 25.0, emergence occurs.

Emergence occurs when the cumulative cost of patching the current configuration exceeds the investment required to build a new one from scratch.
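The toy run can be sketched in Python. The initial state, maintenance function, gap schedule, and R = 25.0 come from the description above. Everything else is an assumption made for this sketch: γ_Z = γ_A = 1, κ = 60, ρ = 80, and a step size of 0.1·g_t. These constants were picked so that the sketch crosses the threshold at the same step as the text reports, and other choices would move the crossing.

```python
def simulate(r=25.0, steps=30, kappa=60.0, rho=80.0,
             gamma_z=1.0, gamma_a=1.0, step_scale=0.1):
    """Run the toy stay-or-go loop; return (crossing step or None, history)."""
    z, a = 1.0, 0.8        # Z_0 and A_0 from the text
    accumulated = 0.0      # running sum of adjustment costs
    history = []
    for t in range(steps):
        gap = 0.1 if t < 10 else 0.5   # step increase at t* = 10
        delta = step_scale * gap       # assumed proportionality constant
        cost_z = delta * gamma_z * (1.0 + z) * kappa
        cost_a = delta * gamma_a * (1.0 + a) * rho
        if cost_z <= cost_a:           # follow the cheaper pathway
            accumulated += cost_z
            z = max(z - delta, 0.0)
        else:
            accumulated += cost_a
            a = max(a - delta, 0.0)
        m = 0.6 * z**2 + 0.8 * a**2    # maintenance strain from the text
        s = m + accumulated            # total stay cost S_t
        history.append((t, z, a, m, accumulated, s))
        if s > r:
            return t, history
    return None, history


crossing, history = simulate()
for t, z, a, m, acc, s in history:
    print(f"t={t:2d}  Z={z:.2f}  A={a:.2f}  M={m:.3f}  sumC={acc:6.2f}  S={s:6.2f}")
print("threshold crossed at t =", crossing)
```

Under these assumptions the impedance pathway is always the cheaper one, maintenance strain stays near 1 throughout, and almost all of the growth in stay cost comes from accumulated adjustment strain after the gap jumps.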

5.6.10 Universal Emergence Pattern

The two calibration cases that follow, the GameStop short squeeze and climate science consensus, show an identical three-phase sequence that appears universal across distributed natural processes. The pattern suggests that emergence timing follows predictable logic rather than random external events, making intervention and prediction possible through strain monitoring and threshold management.

- Phase 1 – Early Stay: Moderate maintenance strain and manageable adjustment costs from local resistance modifications keep total cost below reorganization threshold.

- Phase 2 – Strain Accumulation: Rising maintenance strain makes the current impedance/attachment configuration more expensive to sustain, plus increasing adjustment strain from repeated resistance modifications creates pressure, but staying remains cheaper than reorganizing.

- Phase 3 – Economic Tipping Point: High accumulated adjustment strain from continuous patching efforts finally pushes total stay cost above the reorganization threshold, triggering emergence as reorganization becomes the lower-cost path.

5.6.11 Distributed Realities as Natural Processes

The following case studies on financial coordination in the GameStop short squeeze and the formation of climate science consensus are not natural processes in the strict physical sense. They exist as distributed realities sustained through the interaction of many minds and signals. For the sake of modeling, we treat them as approximations of a Natural Space. They have enough variability and enough interactions to behave in ways that echo Natural Reality. Unlike the universe, which unfolds through limitless interactions, these spaces are bounded by the number of participating minds and the channels that carry their signals. Yet at human scale, the resemblance is close enough to be useful.

5.6.12 GameStop Market Transformation

The 2021 GameStop short squeeze demonstrates the universal emergence pattern in financial coordination processes. This calibration walkthrough shows how one would compute the quantities from observable proxies, though we do not claim ex-ante measurement here. The values below are calibration exemplars designed to illustrate the methodology rather than definitive measurements.

Retail coordination on Reddit created external signals that affected institutional processes possessing affordances for detecting social media coordination. We use parameter calibration with α = 0.8, β = 1.2 as domain coefficients for financial markets, γ_Z = 1.5, γ_A = 2.0 as baseline change difficulties, and κ = 40, ρ = 0.54 as cascade/coordination factors.

During January 4-11 (Phase 1 implementation), impedance measurement showed institutional response delay of 2 days versus 1 day baseline, giving Z_t = 2.0. Attachment measurement showed 85% fundamental analysis coverage, giving A_t = 0.85. Maintenance strain calculated as M_t = 0.8·(2.0)² + 1.2·(0.85)²·1.12 = 4.17. Reddit coordination created gap g_t = 0.3. The choice favored attachment adjustment as cheaper (C^A_t = 2.0 versus C^Z_t = 36.0). Stay cost S_t = 4.17 + 2.0 = 6.17 compared to reorganization R = 78.8 kept the process in its current state.
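The Phase 1 arithmetic can be checked with a few lines of Python. The variable names are hypothetical, and the per-unit costs are derived here from the functional forms d_Z(Z) = γ_Z(1 + Z) and d_A(A) = γ_A(1 + A) rather than quoted from the walkthrough. The maintenance expression evaluates to roughly 4.17 with the 1.12 disruption multiplier and roughly 4.07 without it.

```python
# Phase 1 calibration values quoted in the walkthrough.
alpha, beta = 0.8, 1.2
gamma_z, gamma_a = 1.5, 2.0
kappa, rho = 40.0, 0.54
z, a = 2.0, 0.85
disruption_multiplier = 1.12   # the (1 + intensity * sensitivity) factor

# Maintenance strain, with and without the disruption multiplier.
m_with = alpha * z**2 + beta * a**2 * disruption_multiplier
m_without = alpha * z**2 + beta * a**2

# Cost of one full unit of change along each pathway.
unit_cost_z = gamma_z * (1.0 + z) * kappa
unit_cost_a = gamma_a * (1.0 + a) * rho

print(f"M with multiplier:    {m_with:.3f}")
print(f"M without multiplier: {m_without:.3f}")
print(f"cost per unit of dZ:  {unit_cost_z:.1f}")
print(f"cost per unit of dA:  {unit_cost_a:.3f}")
```

Per unit of change, the impedance pathway is about ninety times more expensive than the attachment pathway under these coefficients, which is why the early adjustments go through attachment.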

During January 20-26 (Phase 3 realization), multiple rapid adjustments occurred with impedance series 2.0 → 0.5 and attachment series 0.85 → 0.20. Accumulated adjustment strain reached 246.3 from continuous resistance modifications. Stay cost became S_t = 246.8 compared to reorganization R = 78.8, triggering reorganization.

Post-emergence, institutional processes adopted “retail coordination” space with transformed impedance/attachment characteristics enabling social media sentiment trading and coordinated retail action.

5.6.13 Climate Science Consensus

Scientific processes from 1988-2010 demonstrate the same universal pattern in knowledge creation domains. We use parameter calibration with α = 0.5, β = 0.8 as scientific domain coefficients and γ_Z = 1.8, γ_A = 1.2 as scientific change difficulties. Note that the absolute numbers differ from the GameStop case because different domains have different cost scales, but the logic remains identical.

The phase timeline shows 1990 (Phase 1) with high maintenance strain (21.6) from extreme impedance/attachment configuration and minimal adjustment strain, leading to Stay (21.6 < R = 49.7). By 2001 (Phase 2), moderate maintenance strain (8.1) plus moderate adjustment strain (15.6) from evidence-driven modifications still led to Stay (23.7 < 49.7). By 2007 (Phase 3), low maintenance strain (0.8) but high accumulated adjustment strain (52.3) from continuous evidence pressure triggered Go (53.1 > 49.7).
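The three phase comparisons can be tabulated with a short Python check. The figures are the ones quoted in the timeline; the 1990 adjustment strain is entered as 0.0 because the text describes it as minimal and compares maintenance strain alone against R. The variable names are hypothetical.

```python
R = 49.7  # reorganization cost quoted for the climate science case

# (year, maintenance strain, accumulated adjustment strain)
phases = [
    ("1990", 21.6, 0.0),   # adjustment strain described as minimal
    ("2001", 8.1, 15.6),
    ("2007", 0.8, 52.3),
]

decisions = {}
for year, maintenance, adjustment in phases:
    total = maintenance + adjustment           # stay cost S_t
    decisions[year] = "Go" if total > R else "Stay"
    print(f"{year}: S = {total:.1f} vs R = {R} -> {decisions[year]}")
```

Maintenance strain falls across the three phases while accumulated adjustment strain rises, so the crossing in 2007 is driven entirely by the second term.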

Emergence occurs when total stay cost exceeds reorganization cost, regardless of the absolute scale. In GameStop, the scale was hundreds of cost units; in climate science, the scale was tens of cost units. Both domains follow identical logic, but their cost structures reflect different environments. Financial markets involve higher coordination costs and cascade effects, while scientific consensus involves lower adjustment costs but longer time horizons.

Post-emergence, scientific processes adopted “anthropogenic causation” space enabling paleoclimate integration, attribution studies, and policy-relevant risk assessment.

5.6.14 Estimation Recipe

The estimation recipe provides a systematic approach for calibrating this framework to new domains and making it practically useful for prediction and intervention. The goal is to transform the theoretical model into a practical tool that can be applied to real-world processes across different contexts.

- Step 1 involves proxy selection, where we map impedance Z to response latency ratio, attachment A to share of defense resources, gap g to normalized anomaly score, and maintenance M via Z², A² with domain coefficients. The key is finding measurable proxies that reliably indicate the underlying resistance parameters.

- Step 2 involves identification, where we estimate d_Z, d_A by regressing realized adjustment choices on g, current Z, A, and context. We ensure monotonicity constraints via isotonic or shape-constrained regression to maintain the theoretical property that adjustment costs increase with extreme values.

- Step 3 involves threshold fit, where we choose R to minimize misclassification of observed reorganizations and report ROC/AUC for π_t. The approach calibrates the reorganization threshold to empirical emergence events.

- Step 4 involves prospective testing, where we pre-register a tipping criterion M̂_t + Σ Ĉ_i > R̂ on a fresh series such as classroom learning or change. This validates the framework’s predictive power on new data.
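Step 3 of the recipe can be sketched in Python. This is an illustration under stated assumptions: the function names are hypothetical, the observations are invented for the example and carry no empirical meaning, and the threshold search is a plain scan over observed stay costs rather than any particular estimator.

```python
def fit_threshold(stay_costs, reorganized):
    """Choose R that minimizes misclassification of the rule 'go iff S > R'."""
    candidates = [min(stay_costs) - 1.0] + sorted(set(stay_costs))
    best_r, best_errors = None, None
    for r in candidates:
        errors = sum((s > r) != y for s, y in zip(stay_costs, reorganized))
        if best_errors is None or errors < best_errors:
            best_r, best_errors = r, errors
    return best_r, best_errors


def auc(scores, labels):
    """Share of (go, stay) pairs in which the go case has the higher score."""
    pos = [s for s, y in zip(scores, labels) if y]
    neg = [s for s, y in zip(scores, labels) if not y]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Invented observations: estimated stay cost and whether reorganization followed.
stay_costs = [6.1, 12.4, 23.7, 30.2, 41.0, 53.1, 60.5, 75.0]
reorganized = [False, False, False, False, True, False, True, True]

r_hat, errors = fit_threshold(stay_costs, reorganized)
print(f"fitted R = {r_hat}, misclassified = {errors}")
print(f"AUC = {auc(stay_costs, reorganized):.3f}")
```

Because π_t is a monotone function of S_t for a fixed R, the AUC computed on stay costs equals the AUC computed on π_t, so the ranking quality can be reported before R is fitted.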

5.6.15 Threats to Validity

Several methodological challenges must be addressed when applying this framework empirically. Proxy choice sensitivity means that different measurements of impedance and attachment could yield different results, requiring robust validation across multiple proxy specifications. Simultaneity creates identification problems because gaps both cause and are caused by Z, A movement, requiring instrumental variables or other causal identification strategies. Confounding shocks to R mean that reorganization costs may change due to unobserved factors, potentially biasing emergence predictions.

The framework provides structure for these econometric challenges rather than resolving them definitively. Careful identification strategies, robustness checks, and replication across contexts will be necessary to establish the framework’s empirical validity.

5.6.16 Predictive Power and Applications

The framework transforms emergence prediction by providing definitions for all variables, enabling quantitative calculation of “Should I Stay or Should I Go?” mechanics in real time. Because emergence requires S_t > R, three levers delay it: reducing maintenance strain, raising reorganization costs, and offering alternative adjustment mechanisms that close gaps more cheaply. Reversing any of them brings the threshold closer.

This approach transforms emergence timing into a measurable threshold problem with clear measurement, estimation, and intervention levers, enabling practical applications in process design, intervention timing, and strategic transformation management across biological, cognitive, and social domains.

By understanding the logic underlying natural process reorganization, we gain new tools for predicting, facilitating, and managing transformation in complex adaptive processes.

5.6.17 Conclusion

Emergence is a calculable phenomenon. By quantifying the costs of staying versus going, we transform what looked unpredictable into a measurable threshold problem. New possibilities open for anticipating transformations, designing interventions, and creating conditions that favor beneficial emergence while preventing harmful reorganization.

Mathematical formalization of stay-vs-go mechanics demonstrates how processes reorganize optimally based on the cost differential between maintaining current state and rebuilding entirely. The threshold operates consistently whether experienced as sudden insight or gradual inevitability. The framework turns emergence timing into a practical tool for prediction and intervention across diverse domains.

Understanding that rules emerge through impedance-modulated application within processes provides the basis for everything else. When we recognize that processes continuously respond to the balance between maintenance and reorganization costs, emergence follows as natural optimization. The mathematics simply formalizes what natural processes have been doing all along, choosing the most economical path forward given their current constraints and available alternatives.

5.6.18 Appendix: Sufficient Conditions for Threshold Ordering

Let m(Z,A) = αZ² + βA², d_Z(Z) = γ_Z(1+Z), d_A(A) = γ_A(1+A), κ,ρ > 0. For any nondecreasing sequence of gaps {g_t} and adjustment step sizes |ΔZ_t|, |ΔA_t| ∝ g_t, the cumulative stay cost S_T = M_T + Σ_{t≤T}(C^Z_t + C^A_t) is increasing and convex in T. Hence, for any fixed R there exists T* such that S_T ≤ R for T < T* and S_T > R for T ≥ T*. (Proof sketch: convexity of m and linear-in-step costs implies subgradient monotonicity; apply standard crossing lemma.)

5.6.19 Where Disruption Comes From

Economic emergence produces disruption that triggers General Selection. When a process crosses its threshold and reorganizes, it creates new behavior in Blue Space. If disruptive enough, this behavior becomes selection pressure that filters surrounding processes. But disruption also arrives from outside the population entirely, through Blue Space changes unrelated to any internal reorganization. Both sources operate through the same General Selection mechanism. An example shows how they work together.

Media companies in the 1990s and 2000s operated under two governing rules. First: content delivery requires brick-and-mortar retail locations. Second: content delivery requires physical media. Blockbuster maintained low impedance to both rules, fully subject to their constraints. Netflix built high impedance to the first rule from inception, then accumulated capacity to break the second rule later. These trajectories determined who survived.

Netflix launched in 1997 with mail-order DVD rental, violating the brick-and-mortar rule immediately with high impedance to retail infrastructure from day one. The company was incoherent relative to the dominant industry model, operating under a different relationship to the first constraint.

Blockbuster recognized the threat and patched continuously between 1998 and 2004: late fee forgiveness programs, the Blockbuster Online mail service, and kiosk experiments were all attempts to compete with Netflix’s mail model while maintaining physical stores. But Blockbuster built no orthogonal capacity. Every response stayed within the existing framework, where stores remained central and the DVD rule remained unquestioned.

While running a successful DVD-by-mail business between 2000 and 2007, Netflix built streaming infrastructure: content licensing deals for digital delivery, recommendation algorithms, and server capacity, all orthogonal to its current operations. The company was accumulating Incoherence relative to the physical media rule while that rule still governed its revenue.

Then external disruption arrived when bandwidth infrastructure improved between 2005 and 2007, built by telecom companies outside the media industry, making streaming technically viable. This change came from outside the population being selected, from adjacent infrastructure development that made streaming possible at scale.

5.7 Closing Remarks

Change happens through General Selection.

It drives how natural processes adapt within existing constraints and transform by changing their relationship with those constraints. Every process cycles through interaction, variability, selection, and accumulation. This four-step loop creates both gradual adaptation and emergence.

Variability comes from many sources. External interactions produce it. Random mutations produce it. Internal reorganization produces it. Netflix’s threshold crossing generated streaming behavior, Incoherence that disrupted an entire industry. Blockbuster built no Incoherence and died. Early streamers built incompatible Incoherence and died. Netflix built harmonized Incoherence and survived their own disruption.

When processes cross economic thresholds, they generate new behavior in Blue Space. If disruptive enough, this behavior triggers selection across populations, filtering based on who built harmonized capacity.

Selection pressure arises when processes reorganize and create disruptive Blue Space behavior, as Netflix did. It also arises from Blue Space phenomena unrelated to any process in the affected population: resource scarcity, climate shifts, regulatory changes, technological breakthroughs, asteroid impacts. General Selection operates the same way regardless of context.

Economic emergence explains when processes reorganize. General Selection explains what happens when that reorganization creates disruption. The threshold model calculates the timing. The selection mechanism determines who survives.

Natural intelligence works at every scale via this mechanism. Processes accumulate strain until reorganization becomes favorable, produce new variability through that reorganization, and face selection based on whether their accumulated Incoherence harmonizes with resulting conditions.

General Selection determines what changes. Light determines how influence propagates.